Information warfare in this age of artificial intelligence has confirmed more than ever what has come to be called “post-truth”, meaning people’s increasing tendency to shape their opinions and beliefs based on emotions rather than facts when it comes to political and public affairs.

People are increasingly rejecting facts and accepting lies, even blatant ones. This is strongly linked to the rumours dominating media outlets and social media, which, shockingly, numerous studies indicate that people accept. This prompted German philosopher Peter Sloterdijk to say that Western societies have become akin to a man who lost trust in doctors and turned to quack healers and charlatans in search of impossible hope.

This has led to the collapse of the fundamental premise of the “infallibility and wisdom of the rational majority”, which is the cornerstone of democracy.

Lies are no longer just random market gossip but a whole industry that not only falsifies facts but also creates events, according to various studies.

The term post-truth does not simply mean manipulating facts and denying reality, as is commonly understood, but rather the difficulty, or even impossibility, for most people of reaching the truth.

In this context, the NATO Strategic Communications Centre of Excellence held its latest annual Riga StratCom Dialogue conference in Riga, Latvia, on June 7-8, 2023, to discuss how artificial intelligence (AI) is not only enhancing adversaries’ offensive capabilities in the digital sphere, but also weakening societies’ defensive capacities against information manipulation.

Objectives:

First: Key Artificial Intelligence-Enhanced Information Warfare Tools and Risks

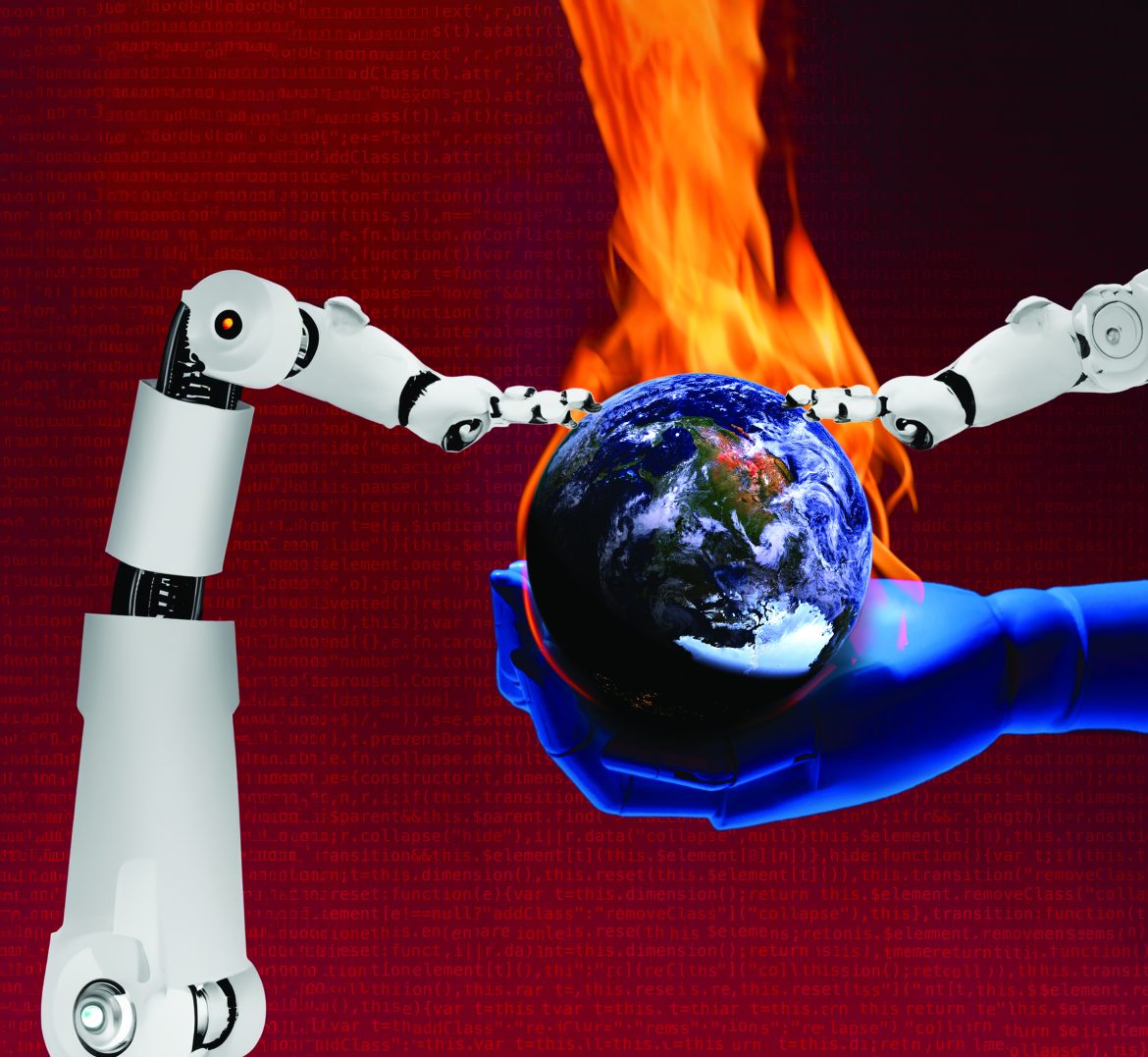

Through text generation programs like ChatGPT and image generators like Midjourney, artificial intelligence can generate and spread misinformation, disinformation or hate speech, leading to information pollution that threatens human progress and international security and peace, as shown in figure No. (1).

The main arena for artificial intelligence-enhanced information warfare is social media, as illustrated in figure No. (2).

A broad segment of the users of these platforms are aware that they are exposed to information warfare, which is sometimes enhanced by AI.

A study of survey data collected from 142 countries found that 58.5% of regular internet and social media users worldwide are concerned about the risk of exposure to false and misleading information online.

Moreover, 83% of respondents in a recent UN opinion survey reported that false and misleading information had a major impact on recent elections in their country/region.

Experts emphasize that the top 10 trends in employing AI for warfare include the use of deepfakes.

For instance, AI can generate fake videos of leaders, officials and commanders making statements that could confuse the public, falsify facts and ignite conflicts. It can also defame and misrepresent public, political and artistic figures through expertly forged voice and facial biometrics, making it extremely difficult, especially for the general public, to detect forgeries or authenticate digital content.

Second: Riga StratCom Dialogue: Context, Goals and Outcomes

Conference Context and Goals

According to the conference, the unstable geopolitical landscape requires NATO member states to develop an effective approach to information warfare.

This is especially important following a series of global crises that have shaped today’s and tomorrow’s world, including the COVID-19 pandemic, the decline of democracy, the Russia-Ukraine war on Europe’s doorstep, the shift of power to non-Western spheres and the climate emergency, all of which have made “permacrisis” a defining word for the decade.

This means NATO members and possibly many world regions will experience a prolonged period of turmoil and suffering due to seemingly endless difficulties and enormous challenges.

The West, possibly the whole world, has fallen into the “permanent crisis” trap.

Furthermore, information warfare, especially its AI-enhanced tools, has exacerbated the crisis and contributed to its persistence.

Technologies like ChatGPT, which recently gained huge popularity, raise serious concerns about unprecedented information manipulation. As the artificial intelligence revolution continues, we can expect major geostrategic shifts accompanied by artificial intelligence-enhanced information warfare.

These developments require innovative, creative thinking and a dynamic, long-term strategy. To that end, the Riga StratCom Dialogue conference brought together hundreds of top experts, practitioners and policymakers from different disciplines worldwide to hold vibrant discussions and seek sustainable solutions. In short, the conference aimed to be a platform for in-depth discussion of how to effectively assess today’s information environment and identify the vulnerabilities that must be addressed to ensure information security.

Key Conference Sessions and Outcomes

● Approaches and Methodologies Session “Tracking Global Influence Campaigns”.

The session was led by the Atlantic Council’s Digital Forensic Research Lab (DFRLab), which took the audience on a journey through case studies of online information manipulation and influence campaigns that used artificial intelligence.

During the session, the supervising team presented recent OSINT investigations that uncovered new aspects of military, political and social affairs in Armenia, Moldova and Sudan, as well as Russia’s ongoing attempts to influence Western Europe in particular and the world in general.

Working through interactive, practical examples on the Meta, X and Telegram platforms, the team turned the session audience, step by step, into “Digital Sherlocks”.

● Deterrence in the Information Environment in the Coming Decades Session

Russia’s invasion of Georgia in 2008 and Ukraine in 2014, the conflict in Syria, migration, and other global challenges were wake-up calls the West ignored in a moment of complacency. The events of 2022 proved that the outbreak of a large-scale conventional war is not just a hypothetical possibility, but a brutal reality happening in the heart of Europe, according to participants in this session.

The core question of this session was: Can the West deter Russia, not only to prevent further bloodshed but also to keep it from carrying out hybrid and foreign interventions in Europe, including in the information sphere and especially with AI-enhanced tools?

● Countering Adversary Narratives Session

According to NATO, China continues to invest in promoting its narratives and visions of the world, exerting a prominent influence across the so-called “Global South”. What amount of information infrastructure data has the Chinese Communist Party amassed under the guise of the Global South? Are NATO and its allies ready for tomorrow’s artificial intelligence-enhanced information battlefields with China and other unfriendly states? On the other hand, Russian narratives and powerful proxies in the information space of many countries in Africa, Latin America and other regions pose a threat to Western narratives. The question this session raised is: What can NATO and its allies do to counter these narratives?

What makes countering these narratives so difficult is the ability to use AI-enhanced tools to produce cheap and high-quality propaganda.

Thanks to their ability to write code in different programming languages, programs like ChatGPT, for example, facilitate the creation of websites and botnets aimed at amplifying narratives and covertly promoting them.

Third: Proposals for Countering AI-Enhanced Information Warfare and its Challenges

The risks that AI tools generating and spreading false information, disinformation or hate speech pose to societies’ resilience are indeed real. However, the following approaches can help mitigate their effects:

● A major increase in academic research investments: conducting more research would help improve our ability to detect messages fabricated by AI tools, especially given the growing capabilities of machine learning and of stylometry, i.e. the statistical analysis of linguistic style to identify telltale patterns of expression.

● Making data collected by companies’ own fact-checking tools available: this would strengthen efforts to enable citizens to defend their information and societies. A recent study highlights the potential of such corporate programs, including ChatGPT, to improve the efficiency and speed of fact-checking for citizens, provided that the neutrality of the information used by such programs and companies can be guaranteed.

● Rethinking the legal frameworks concerning the threat of AI to the cohesion of societies as a whole: clear rules and guidelines are needed to hold companies accountable when their tools are used for deception. Academic consensus seems to support the rapid adaptation of national, and even European, legal frameworks to include AI provisions, such as those related to copyright or user data protection. Meanwhile, preventive measures can be adopted in the face of threats posed by the authoritarian use of AI.

● Expanding the methods used by governmental entities in their strategic communications: this approach should build on the positive effects of preventive exposure to misinformation.

● Balancing innovation and safety: such a balance is critical to ensure responsible AI development and use in the information sphere. Governments therefore need to develop and implement regulatory frameworks to address potential AI risks and effects on the information environment.

● Establishing an ethical framework for responsible AI development and use: such a framework is critical for the security of the information environment and should rest on transparency, fairness and accountability as the fundamental principles guiding AI development and use in information generation and dissemination. Additionally, AI systems should be designed to align with human values and rights, foster inclusiveness, and avoid bias and discrimination. Moreover, ethical considerations must be an integral part of the AI development lifecycle in the information sphere.

● Public education as a defence against information deception: educating individuals about AI and its uses in information warfare is critical to building a society capable of overcoming such warfare.

● Cooperative partnerships between stakeholders: these partnerships can help counter artificial intelligence-enhanced or -generated information warfare. Addressing the challenges posed by AI in the information sphere requires cooperation between AI experts, policymakers and industry leaders, who must combine their expertise and perspectives to devise effective solutions.

However, some challenges hinder the implementation or effectiveness of these proposed solutions:

First: Monopolistic practices. As with digital platforms, data from user interactions with AI tools remains monopolized by a handful of corporations and governments.

Second: The lack of technical and financial capacity among political decision-makers and the scientific community to effectively counter the threats posed by AI in information deception.

Third: The geopolitical dimensions that hamper efforts for responsible AI use. While calls to establish international centres responsible for monitoring AI developments seem commendable, they remain an unrealistic policy for states and alliances.

Conclusion

Like NATO, many blocs are increasingly convinced that AI’s unchecked progress can turn it into a powerful tool for deception and political disruption. At its May 2023 summit in Hiroshima, Japan, the G7 said it planned to rein in AI and launched the “Hiroshima AI Process” to hold ministerial-level discussions on rapidly evolving AI technologies and their effects on the information environment.

The following June, United Nations Secretary-General António Guterres said the world must confront the “monumental international harm” stemming from the spread of hatred and lies online.

Thus, it seems that modern human information environments will remain threatened by information deception, hate speech and artificial intelligence-enhanced disinformation warfare until we find a way to coexist with these threats or curb their most dangerous effects.

By: Professor Wael Saleh

(Expert at Trends Research & Advisory Center)